CodeFuse是蚂蚁自研的代码生成专属大模型,根据开发者的输入,帮助开发者自动生成代码、自动增加注释、自动生成测试用例、修复和优化代码等,以提升研发效率。无论用户是初学者还是有经验的开发者,CodeFuse 都能够极大地提高编程效率和准确性。

蚂蚁集团在刚刚结束的2023外滩大会上开源了代码大模型CodeFuse,目前在魔搭社区可下载、体验。

https://modelscope.cn/studios/codefuse-ai/CodeFuse-CodeLlama34B-MFT-Demo/summary

https://modelscope.cn/models/codefuse-ai/CodeFuse-13B/summary

CodeFuse是蚂蚁集团自研的代码生成模型,能提供智能建议和实时支持,帮助开发者自动生成代码、注释、测试用例等,提高研发效率。在评测中,CodeFuse的得分超过了GPT-4和WizardCoder-34B。开源内容包括代码框架和模型。代码框架支持多任务微调,包括代码生成、翻译、测试用例生成等任务。

模型包括 CodeFuse13B-4K 和 CodeFuse-CodeLlaMa34B-MFT。CodeFuse早在6月开始内测,可用于开发助手、IDE插件等应用场景。

模型体验

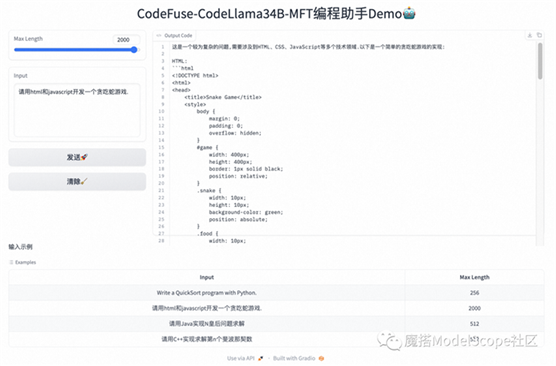

CodeFuse-CodeLlaMa34B-MFT已经上线魔搭社区创空间,开发者们可以在创空间直接体验模型的代码生成效果。

创空间链接:

https://modelscope.cn/studios/codefuse-ai/CodeFuse-CodeLlama34B-MFT-Demo/summary

模型链接及下载

CodeFuse系列模型现已在ModelScope社区开源,包括:

CodeFuse-13B模型:

https://modelscope.cn/models/codefuse-ai/CodeFuse-13B/summary

from

modelscope.hub.snapshot_download import snapshot_download

model_dir

= snapshot_download('codefuse-ai/CodeFuse-13B', revision='v1.0.0')

CodeFuse-CodeLlama-34B模型:

https://modelscope.cn/models/codefuse-ai/CodeFuse-CodeLlama-34B/summary

from

modelscope.hub.snapshot_download import snapshot_download

model_dir

= snapshot_download('codefuse-ai/CodeFuse-CodeLlama-34B', revision='v1.0.0')

模型推理

CodeFuse-13B的推理代码

import

torch

from

modelscope import AutoModelForCausalLM, AutoTokenizer, snapshot_download

model_dir

= snapshot_download('codefuse-ai/CodeFuse-13B', revision='v1.0.0')

tokenizer

= AutoTokenizer.from_pretrained(model_dir)

model =

AutoModelForCausalLM.from_pretrained(model_dir, device_map="auto",

torch_dtype=torch.float16).eval()

input_ids

= tokenizer.encode("# language: Python\ndef quick_sort(array):\n",

return_tensors="pt").to("cuda")

output_ids

= model.generate(input_ids, max_new_tokens=200)

print(tokenizer.decode(output_ids[0]))

"""Out[0]

#

language: Python

def

quick_sort(array):

if len(array) <= 1:

return array

else:

pivot = array[0]

less_than_pivot = [i for i in array[1:] if i <= pivot]

greater_than_pivot = [i for i in array[1:] if i > pivot]

return quick_sort(less_than_pivot) + [pivot] + quick_sort(greater_than_pivot)

# Test

the function

print(quick_sort([3,6,8,10,1,2,1]))<|endoftext|>

"""

CodeFuse-CodeLlama-34B的推理代码

import

torch

from

modelscope import AutoTokenizer, AutoModelForCausalLM, snapshot_download

model_dir

= snapshot_download('codefuse-ai/CodeFuse-CodeLlama-34B', revision='v1.0.0')

tokenizer

= AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True,

use_fast=False, legacy=False)

tokenizer.padding_side

= "left"

tokenizer.pad_token_id

= tokenizer.convert_tokens_to_ids("<unk>")

tokenizer.eos_token_id

= tokenizer.convert_tokens_to_ids("</s>")

model =

AutoModelForCausalLM.from_pretrained(model_dir, trust_remote_code=True,

device_map='auto',

torch_dtype=torch.bfloat16)

HUMAN_ROLE_START_TAG

= "<|role_start|>human<|role_end|>"

BOT_ROLE_START_TAG

= "<|role_start|>bot<|role_end|>"

text =

f"{HUMAN_ROLE_START_TAG}write a python function of quick

sort.{BOT_ROLE_START_TAG}"

inputs =

tokenizer(text, return_tensors='pt', padding=True,

add_special_tokens=False).to("cuda")

outputs

= model.generate(

inputs=inputs["input_ids"],

attention_mask=inputs["attention_mask"],

max_new_tokens=512,

top_p=0.95,

temperature=0.1,

do_sample=True,

eos_token_id=tokenizer.eos_token_id,

pad_token_id=tokenizer.pad_token_id

)

gen_text

= tokenizer.batch_decode(outputs[:, inputs["input_ids"].shape[1]:],

skip_special_tokens=True)

print(gen_text[0])

"""Out[0]

Here is

a Python function for quick sort:

```python

def

quick_sort(arr):

if len(arr) <= 1:

return arr

else:

pivot = arr[0]

less = [i for i in arr[1:] if i <= pivot]

greater = [i for i in arr[1:] if i > pivot]

return quick_sort(less) + [pivot] + quick_sort(greater)

```

This

function works by selecting the first element of the array as the pivot, and

then partitioning the rest of the array into two parts: one with elements less

than the pivot, and one with elements greater than the pivot. It then

recursively sorts the two parts, and concatenates them with the pivot in the

middle. It continues this process until the array is sorted.

Please

note that this is a simple implementation of quick sort and may not be the most

efficient for large lists. For large lists, a more complex version of quick

sort that uses a partition function and swaps elements in place would be more

efficient.

"""

05

数据集开源

同时,CodeFuse项目开源了两个数据集,CodeExercise-Python-27k和Evol-instruction-66k。

CodeExercise-Python-27k由2.7万道Python编程练习题(英文)组成,覆盖基础语法与数据结构、算法应用、数据库查询、机器学习等数百个Python相关知识点。

CodeExercise-Python-27k 数据集链接:https://modelscope.cn/datasets/codefuse-ai/CodeExercise-Python-27k/summary

Evol-instruction-66k是根据论文《WizardCoder: Empowering Code Large Language Models with

Evol-Instruct》中提到的方法,通过添加复杂的代码指令来增强预训练代码大模型的微调效果。 该数据是在开源数据集Evol-Instruct-Code-80k-v1基础上对数据进行了一系列处理,包括低质量过滤、HumanEval评测相似数据过滤等,从原始80k数据筛选后得到66k高质量训练微调数据。

Evol-instruction-66k数据集链接:https://modelscope.cn/datasets/

本文档由网友提供,仅限参考学习,如有不妥或产生版权问题,请联系我们及时删除。

客服请加微信:skillupvip